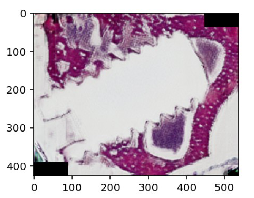

pseudo-histology synthesis training from corresponding micro-CT slices on manually registered samples

Documentation: https://gitlab.desy.de/MDLMA/histology

Source: https://gitlab.desy.de/MDLMA/histology

Author: C.Lucas (Hereon)

Jupyter: available as a Jupyter kernel (pytorch) on https://max-jhub.desy.de

Maxwell: mamba activate /software/jupyter/.conda/latest/pytorch

Module: module load maxwell mdlma/histology

Scripts: the scripts are available on Maxwell at /software/mdlma/histology and are included in the module path

Description:

This module contains the scripts to run the pseudo-histology synthesis training from corresponding micro-CT slices on manually registered samples.

It uses the CycleGAN implementation by Erik Linder-Norén and adds an extra reconstruction loss on the fake B-modality image to the generator loss.
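The following is a minimal sketch of how such a generator loss could be assembled, not the code in train.py. It assumes the usual CycleGAN terms (least-squares adversarial loss, L1 cycle-consistency loss, L1 identity loss) and adds an L1 term between the fake B image and the registered real B image. The function name `generator_loss`, the weight `lambda_rec` and all default weights are assumptions made for illustration; the actual weighting is defined in the repository.

```python
import torch
import torch.nn as nn

criterion_gan = nn.MSELoss()  # least-squares adversarial loss
criterion_l1 = nn.L1Loss()    # used for cycle, identity and reconstruction terms


def generator_loss(real_A, real_B, G_AB, G_BA, D_A, D_B,
                   lambda_cyc=10.0, lambda_id=5.0, lambda_rec=1.0):
    """Hypothetical generator loss: CycleGAN terms plus a paired L1 term on fake B.

    lambda_rec is an illustrative weight, not a value taken from settings.py.
    """
    fake_B = G_AB(real_A)
    fake_A = G_BA(real_B)

    # Adversarial term: generators try to make the discriminators output "valid".
    pred_B = D_B(fake_B)
    pred_A = D_A(fake_A)
    loss_gan = 0.5 * (criterion_gan(pred_B, torch.ones_like(pred_B))
                      + criterion_gan(pred_A, torch.ones_like(pred_A)))

    # Cycle consistency: A -> B -> A and B -> A -> B should return the input.
    loss_cycle = 0.5 * (criterion_l1(G_BA(fake_B), real_A)
                        + criterion_l1(G_AB(fake_A), real_B))

    # Identity: a generator fed an image of its target domain should not change it.
    loss_id = 0.5 * (criterion_l1(G_BA(real_A), real_A)
                     + criterion_l1(G_AB(real_B), real_B))

    # Extra term: because the samples are registered, fake B can be compared
    # directly with the corresponding real B.
    loss_rec = criterion_l1(fake_B, real_B)

    return (loss_gan + lambda_cyc * loss_cycle
            + lambda_id * loss_id + lambda_rec * loss_rec)
```

A paired term of this kind is only meaningful because the A and B slices are registered; with unpaired data, fake B and real B would not show the same anatomy.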

The module consists of:

- train.py main training script to be run on the "train_dir" data (see settings.py)

- settings.py contains the training parameters to be defined beforehand

- model.py contains the ResNet-based generator and discriminator model definitions

- data.py contains the data loader for training and test

- pytorch_train_maskOutInnerScrew contains all 8 manually registered cases, with large parts of the screw area masked out to compensate for the imbalance in tissue prevalence in the images and to reduce the dominance of the screw area

- pytorch_train_noMask contains all 8 cases with only the background masked out

- test.py test script to load the models and test them on the "test_dir" data

- test-volume.ipynb Jupyter notebook for testing on the original volume data rather than the manually registered samples; it therefore includes several manual corrections, because the value range / data type of the volume data differs from that of the training data

- utils.py utility functions required for training or visualization
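As an illustration of the kind of value range / data type correction mentioned for test-volume.ipynb, the sketch below rescales a single slice to floating point in a fixed range. It is not taken from the notebook: the helper name `rescale_to_training_range` and the target range of [-1, 1] are assumptions, and the range actually expected by the trained models should be checked against data.py.

```python
import numpy as np


def rescale_to_training_range(slice_2d, out_min=-1.0, out_max=1.0):
    """Min-max rescale a slice of any numeric dtype to float32 in [out_min, out_max].

    Hypothetical helper; the target range is an assumption, not a repository setting.
    """
    data = np.asarray(slice_2d, dtype=np.float32)
    lo, hi = float(data.min()), float(data.max())
    if hi == lo:
        # A constant slice carries no contrast; map it to the lower bound.
        return np.full(data.shape, out_min, dtype=np.float32)
    scaled = (data - lo) / (hi - lo)  # now in [0, 1]
    return (scaled * (out_max - out_min) + out_min).astype(np.float32)
```

Per-slice min-max scaling changes the contrast from slice to slice; for a whole volume it may be preferable to compute the minimum and maximum once over the full volume and reuse them.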